Artificial Intelligence has been around for more than 50 years. The term “Artificial Intelligence” was first used in 1956 by John McCarthy. However, the use and impact of Artificial Intelligence and Machine Learning have exploded in recent years. This is mainly due to hardware development, which has provided the necessary performance. However, advances in algorithms and data availability have also played a significant role. This article gives an overview of past and current AI development and describes some of the benefits and concerns of the expected growth.

- Applications of Artificial Intelligence?

- Speed of innovation

- History of Artificial Intelligence

- Levels of Artificial Intelligence

- Different types of Artificial Intelligence

- ChatGPT

- Try out ChatGPT for yourself.

- ChatGPT on how it works

- Risks with AI

- Conclusions

- Do you need help with developing AI solutions?

Artificial Intelligence (AI) has become one of the critical areas of IT development in recent years. Thanks to AI, computers have beaten humans in various games, such as:

- Chess (1997)

- Poker (2008)

- Jeopardy (2011)

- Atari games (2013)

- AlphaGo (2015): A computer program developed by DeepMind to play the complex board game Go. It beat European champion Fan Hui in 2015 and, in a highly publicised match in 2016, top professional Lee Sedol. However, humans have since beaten Go programs by using entirely different strategies.

- Texas Hold ’em (2017)

- Dota 2 (2017): A famous multiplayer online battle arena (MOBA) game in which an AI agent developed by OpenAI defeated some of the top professional players in the world.

- StarCraft II (2018): A real-time strategy game played and mastered by AI agents developed by DeepMind.

- Super Smash Bros. (2019): A fighting video game in which an AI system developed by Nvidia defeated professional players.

Perhaps more significant than any of these is that AI surpassed humans in image recognition accuracy in 2015, and the accuracy has continued to improve since.

Computers are not really “intelligent” but have only outperformed humans by sheer brute force. They are not really “thinking”. AI systems today are based on statistical and machine learning models, which can achieve impressive results but don’t understand the concepts they are processing.

However, these cases still show that computer solutions are starting to outperform humans in some specific areas. Computers winning trivial games may not significantly impact our day-to-day lives. However, as we shall see in this article, there are other domains where computer solutions are improving and considerably impacting our lives.

Applications of Artificial Intelligence?

Artificial Intelligence is used in areas as diverse as Google search, virtual personal assistants such as Siri, Google Now, Cortana or Alexa, various video games, and purchase predictions and recommendations on websites and music services.

AI is also used for fraud detection, to produce simple news updates such as financial summaries and sports reports, for security surveillance, and even in smart home devices. It is further used for stock trading, in medical diagnostics, for various military applications and, of course, for self-driving cars. In most of these cases, we are unaware that AI is involved.

Specific applications of AI have existed since the late ’50s, but, like fusion power, practical use of the technology always seemed to be in the distant future. However, in the last five years, the pace has accelerated dramatically.

Speed of innovation

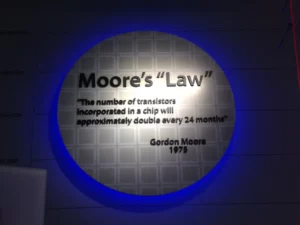

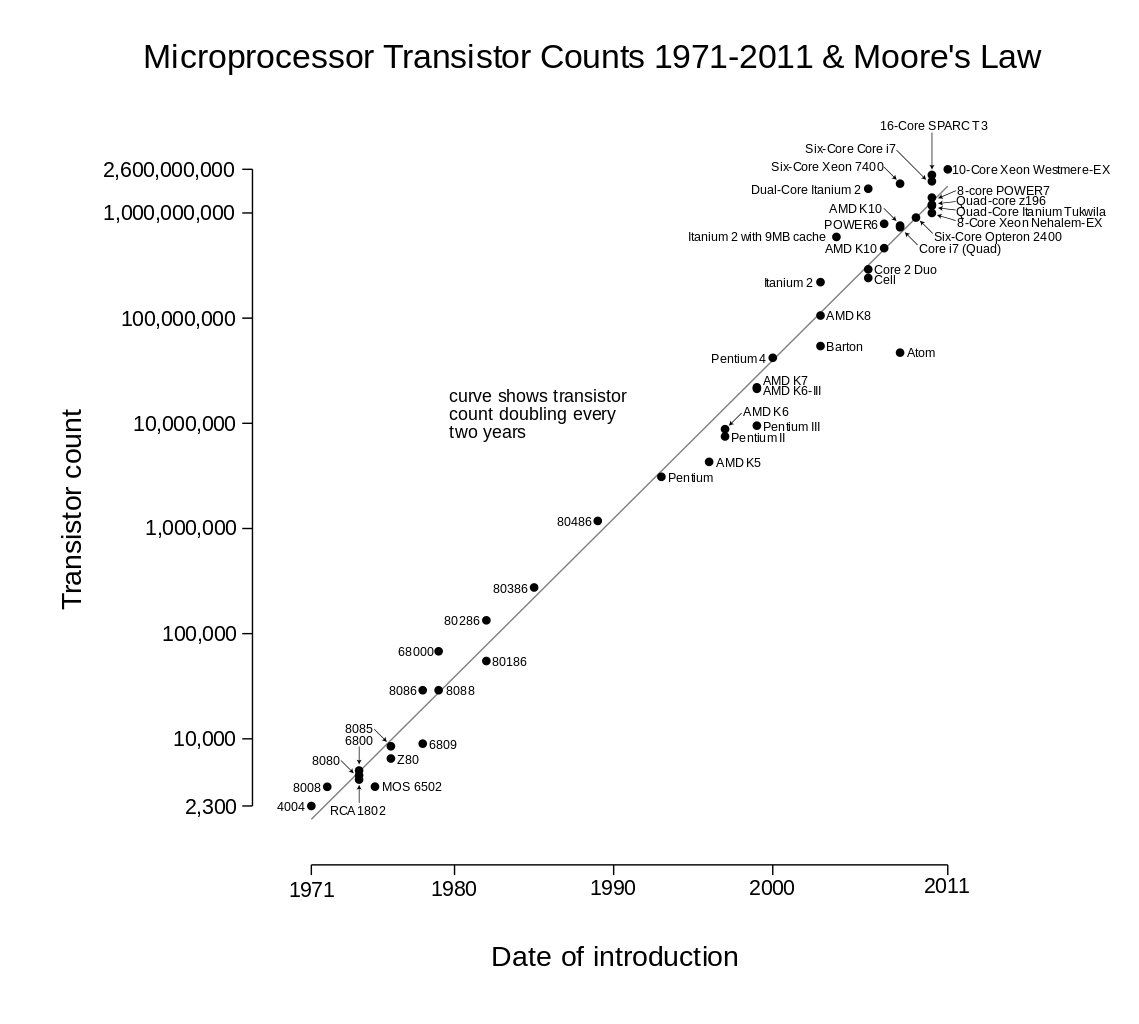

A lot of technology development has been exponential. In particular, computer development has followed Moore’s law: the observation that the number of transistors per square inch on integrated circuits doubles every two years. Gordon Moore predicted this trend would continue into the foreseeable future, and it has driven the performance of computers along a sustained exponential curve. The speed of innovation related to Artificial Intelligence can be said to have outpaced even Moore’s law.

AI runs on computers and is part of the same trend. Hence, AI development is going faster than most of us realise. Human capability has also increased over decades and centuries, but much more linearly. It is worth remembering a quote from Bill Gates: “We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten. Don’t let yourself be lulled into inaction.”
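To make the difference between exponential and linear growth concrete, here is a small Python sketch. It assumes an idealised doubling every two years, as in Moore’s law; the function names and the 5% yearly linear gain are illustrative assumptions, not measured figures.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth multiple after `years` if capacity doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def linear_factor(years: float, yearly_gain: float = 0.05) -> float:
    """Growth multiple after `years` with a fixed gain per year.

    The 5% yearly gain is an arbitrary assumption used only for comparison.
    """
    return 1 + yearly_gain * years


for years in (2, 10, 20, 40):
    print(
        f"{years:>2} years: exponential x{growth_factor(years):,.0f}, "
        f"linear x{linear_factor(years):.1f}"
    )
```

Under these assumptions, ten doublings over 20 years give roughly a thousandfold increase, while the linear curve merely doubles over the same period.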

Artificial Intelligence is expected to impact our lives significantly in the following decades. Expected breakthroughs include autonomous cars, transportation, and automated manufacturing, and some reports suggest that half of the job titles known today may be gone within 20 years. Therefore, understanding what artificial intelligence is and what consequences the expected development may cause is no longer a subject for an obscure academic community; it is essential for everyone. This area is still a matter of debate and speculation.

History of Artificial Intelligence

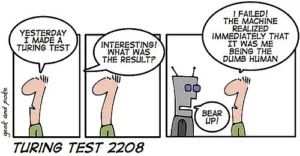

Even though the concept of robots and the foundation of neural networks are older than AI, the idea of Artificial Intelligence is ascribed to Alan Turing, who in 1950 published a now-famous paper, “Computing Machinery and Intelligence”, which suggested that it was possible to construct a machine that could think. In this paper, he also described what later became known as the Turing Test. In the test, a human interrogator communicates via a network with two other entities (one computer and one human being). If the interrogator cannot determine which is the human and which is the computer, the computer should be considered intelligent.

Breakthrough

In 1956, John McCarthy suggested the term “Artificial Intelligence”. During the next few years, scientists from fields as diverse as mathematics, psychology, engineering, economics and political science discussed the concept of an artificial brain. The real breakthrough of AI happened from 1990 onward, and we are now in a formidable revolution of using machine learning and other concepts. While many applications may not be visible to the average person, the following years and decades will be disruptive.

Science fiction literature traditionally suggested robots with human-like characteristics. That is no longer the mainstream expectation.

Levels of Artificial Intelligence

The AI community defines three levels of AI (It should be noted that there is still ongoing discussion and disagreement within the AI community about these definitions and their implications):

- Narrow or weak AI (ANI) means using computers and software to resolve simple discrete tasks such as driving a car, voice, pattern, or image recognition, text analysis, search, playing a game, or any other current application of AI, including all of the above-described applications. Note that narrow or weak AI generally exceeds human capability within its limited area.

- General or Strong AI (AGI) can conduct any generic cognitive task a human can do. This level refers to when the AI solution is as intelligent as a human being. It does not yet exist.

- Artificial Superintelligence (ASI) is a hypothetical level at which an AI solution can beat humans in any field, including science, innovation, wisdom, and social capability. Note that the difference between AGI and ASI is minor. When computers reach the AGI level with access to the entire Internet, they have already passed humans. The moment when computer intelligence surpasses human capability is often called “The Singularity.”

Different types of Artificial Intelligence

Artificial Intelligence is an umbrella term. In reality, it covers a broad range of different approaches. In his book on machine learning, The Master Algorithm, Pedro Domingos describes five AI paradigms, which he calls “tribes”:

- Symbolists – Use inverse deduction and decision trees

- Connectionists – Use neural networks (more recently called Deep Learning)

- Evolutionaries – Use survival-of-the-fittest programs

- Bayesians – Use statistical analysis

- Analogizers – Use algorithms that learn from similar cases

Different types of AI technologies and applications

Various diverse technologies and techniques jointly make up the area of Artificial Intelligence. Here are some examples:

- Computer Vision: This field of AI focuses on enabling computers to interpret and understand visual information, such as images and videos. Computer vision has many applications, including image classification, object detection, and image segmentation.

- Expert Systems use a knowledge base (a database of previous cases) to infer and present knowledge.

- Fuzzy logic, where degrees of truth are used rather than exact true or false values.

- Generative Adversarial Networks (GANs): These types of neural networks use two networks, a generator and a discriminator, to generate new data. The generator creates new data, while the discriminator evaluates the generated data and provides feedback to the generator to improve its accuracy. GANs are used for many applications, including image generation, style transfer, etc.

- Grammatical inference: Various techniques have been used, such as Genetic Algorithms (simulating biological modification of genes), Tabu search, MDL (Minimum Description Length, a variety of Occam’s Razor where the most straightforward option is preferred), heuristic greedy state merging, evidence-driven state merging, graph colouring, and constraint satisfaction.

- Handwriting recognition that can read human handwriting.

- An Intelligent Agent is an independent program that performs some services, such as collecting information. For example, an agent can regularly search the Internet for information you are interested in.

- Natural Language Processing (NLP): This field of AI focuses on enabling computers to understand, interpret, and generate human language. NLP has many applications, including sentiment analysis, machine translation, and text generation.

- Neural Networks and Deep Learning, described in the next section, are used in many modern AI applications.

- Optical Character Recognition (OCR)

- Pathfinding: Techniques such as Neural Networks, Genetic Algorithms and Reinforcement Learning have been used.

- Reinforcement Learning: This type of machine learning uses rewards and punishments to train AI systems to make decisions and solve problems. It has been applied in various fields, including robotics, gaming, and autonomous vehicles.

- Sentiment analysis: The process of determining whether a piece of writing is positive, negative, or neutral. It’s also known as opinion mining, which involves deriving a speaker’s opinion or attitude.
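As an illustration of the last item, sentiment analysis can be sketched in its simplest form by counting positive and negative words. The word lists below are hypothetical and far too small for real use, and modern systems typically rely on trained machine learning models rather than fixed lists, so treat this only as a sketch of the idea.

```python
import re

# Hypothetical mini-lexicons, chosen only for illustration.
POSITIVE = {"good", "great", "excellent", "love", "happy", "impressive"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "sad", "disappointing"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative or neutral by counting lexicon words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The service was great and the staff were excellent"))
print(sentiment("A terrible, disappointing film"))
print(sentiment("The parcel arrived on Tuesday"))
```

A word-counting approach like this misses negation and sarcasm ("not good" still counts as positive), which is one reason learned models are preferred in practice.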

Deep Learning

In 1957, an algorithm called the Perceptron was developed. It was an early form of what later became known as neural networks. It is called a neural network since the algorithm’s mechanism is modelled on how our brain cells work.

The first implementation was developed as software for the IBM 704 computer by Frank Rosenblatt at the Cornell Aeronautical Laboratory, funded by the US Office of Naval Research. Later, it was developed into specialised hardware. It implemented a simple artificial version of brain neurons and had basic image recognition capabilities.

In the news at the time, it was described as if later versions would “be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” While that has not yet happened, the same technology (neural networks) is used in many pattern recognition algorithms. The same basic algorithms have been further developed and enhanced. Together with the enormous improvement in computer performance, they have made the last few years of development possible.
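The idea behind the perceptron can be sketched in a few lines of Python. This is the common textbook form of the learning rule (a weighted sum, a threshold, and a correction whenever the output is wrong), not a reconstruction of Rosenblatt’s original implementation. The function names and the choice of the logical AND function as training data are assumptions made for illustration.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Learn weights with the perceptron rule: nudge weights after each mistake."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND: output 1 only when both inputs are 1.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_GATE)
for inputs, target in AND_GATE:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

A single perceptron can only learn patterns that can be separated by a straight line, such as AND. Modern deep learning stacks many such units in layers to handle far more complex patterns.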

Recent applications of AI

Machine learning using artificial neural networks, so-called deep learning, has driven a formidable revolution over the last few years. Examples include:

- Self-driving cars or autonomous vehicles

- AI predicts with 90% reliability what will be sold in the next 30 days and makes automatic purchases accordingly.

- Take a picture of a part to find a replacement part

- Protecting your heart

- One of our clients, Artios, uses machine learning to improve its customers’ positions in Google search results.

- DALL-E 2 is a state-of-the-art computer vision system that generates unique images from textual descriptions.

- AI Text Classifier is an AI model from OpenAI that estimates how likely it is that a piece of text was written by AI rather than by a human.

- Whisper is a speech recognition system from OpenAI that transcribes spoken audio into text.

- Alignment is a research area that aims to make AI models follow the intention behind human instructions, making it possible to build more helpful conversational AI systems.

ChatGPT

OpenAI released ChatGPT, a very advanced conversational text tool, on November 30, 2022. It is challenging for a human to tell that there is not a human being on the other side. However, other AI tools are available online to estimate the probability that a machine wrote a given text.

ChatGPT is a language model developed by OpenAI, a leading artificial intelligence research laboratory. The model is based on the GPT (Generative Pretrained Transformer) architecture, which uses deep neural networks to generate human-like language. Unlike earlier AI technologies, ChatGPT can generate grammatically correct, semantically meaningful, and contextually relevant text. This makes it well-suited for various applications, such as language translation, question answering, and creative writing.

The model used by ChatGPT is fine-tuned from the GPT-3.5 series, a refinement of GPT-3, and is one of the most advanced AI technologies currently available. With its massive scale and powerful language generation capabilities, the GPT-3 family can potentially transform many industries, including customer service, marketing, and education. Despite its impressive capabilities, it is still far from perfect, and ongoing research aims to improve its accuracy and reduce the potential for bias.

Generative Pre-trained Transformer 3 (GPT-3) is a cutting-edge artificial intelligence language model developed by OpenAI. GPT-3 is a deep learning model that uses an attention mechanism to process and generate text. The “pre-trained” part of its name refers to the fact that the model has been trained on a large corpus of text data, allowing it to generate text similar to the text it was trained on. The “transformer” part refers to the Transformer, the neural network architecture on which the model is based and which is well-suited for processing sequential data, like text.
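The attention mechanism mentioned above can be illustrated with a minimal Python sketch of scaled dot-product attention, the core operation of the Transformer. This is a heavy simplification: real GPT models add learned projection matrices, many attention heads and layers, and masking, none of which is shown here. The function names and the tiny example vectors are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # How strongly this query matches each key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs


# Three made-up 2-dimensional token vectors (illustrative numbers only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row is a blend of all the token vectors, weighted by how similar they are to the token being processed. This is how the model lets every word in a sentence take the other words into account.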

Try out ChatGPT for yourself.

ChatGPT can be used for many different applications, and it is available for anyone to use. You can ask it questions about almost any subject (its training data is presently limited to texts written before 2022). It works in most languages. It can write texts based on your requests, translate between languages, write computer code, or even check why a particular piece of code does not work. Microsoft has now released a version of ChatGPT as part of its search engine Bing. OpenAI also provides an API so companies can use the technology for various applications. However, watch out: this is an experimental technology, and ChatGPT still makes mistakes.

ChatGPT on how it works

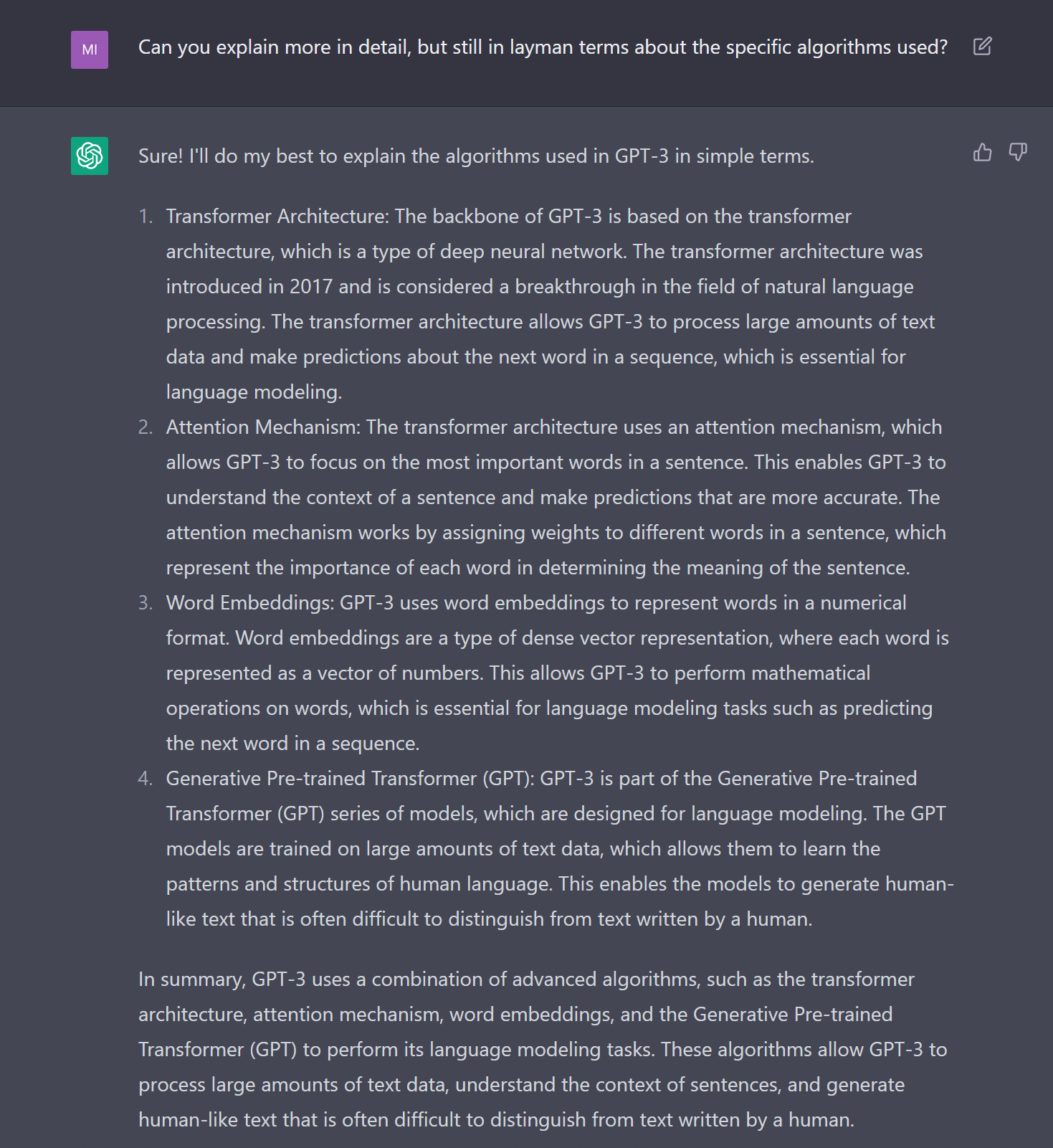

I asked ChatGPT to explain what algorithms are used and how they work, and here is its answer:

This article was written in 2017, but on February 13, 2023, apart from the question above, I also asked ChatGPT to review the article and suggest improvements. Overall, it suggested adding some games after 2015 where AI has beaten humans, covering the latest technologies, and making some minor corrections, all of which I have used to improve the article. It also ended with a rider: “In general, the article provides a general overview of the history and current state of AI, but it would be helpful to take its predictions and conclusions with a grain of caution and seek out more in-depth and reliable sources for further information”. I have made minor adjustments to my text based on the feedback.

In my experience, it is possible to use ChatGPT as an intelligent assistant when writing texts. However, since it occasionally gives wrong information and sometimes even makes up facts and presents them confidently, I strongly recommend verifying all texts it produces before using them.

Risks with AI

Government institutions, as well as employers, collect more and more data. When combining analytics and AI, it may be possible to identify risky behaviour. While this may have some positive effects, there are also risks. Many intellectuals are concerned about the potential development of strong AI. There are a lot of valid reasons to worry. Public figures such as Stephen Hawking, Steve Wozniak, Bill Gates, and Elon Musk have each warned that strong AI could threaten humanity. Philosopher Nick Bostrom has also raised similar concerns.

While their concerns are probably valid, weaker forms of AI are perhaps already a threat when used by the wrong person. While strong AI may take a long time to materialise, there are already indications that AI and analytics have been used to affect the results of recent votes, including the Brexit referendum and the US presidential election in 2016. Today’s AI algorithms are not necessarily very intelligent: there are indications that Google’s search engine and Facebook have been manipulated into showing propaganda ahead of serious news.

Elon Musk has raised concerns about AI being used to fight wars. As the development of artificial intelligence progresses, AI implementations will get more intelligent. There may not be one super-intelligence in the world, but many. If these are fighting wars with each other, humanity may well be at risk. However, even now, AI used for the wrong purposes is already dangerous.

When will AI take your job?

So far, automation and robots have not taken a lot of jobs. Harvard economist James Bessen looked at the professions listed in the 1950 US census and found that only one had disappeared: the elevator operator. Indeed, jobs are disappearing, or perhaps more correctly, the number of people working in certain professions is being reduced.

But new jobs have been created instead, and so far, the threats of unemployment have been severely exaggerated. That may not be the case in the future, and given the increasing number of applications, we may see more risks in the not-so-distant future. However, when only certain parts of a process are automated, prices may go down and demand may increase. Hence, other jobs may be created, softening the drastic impact that would otherwise be easy to predict.

However, that is only one side of the coin. AI experts themselves are worried. In a survey, they thought that by 2032 half the driving on motorways would be done by self-driving cars. Job losses may also come in sectors where they are not expected. One article suggests that the legal profession is ripe for automation. Since much manual labour is currently spent searching through old cases, AI algorithms can easily replace such research work.

How will society change?

On another level, automation, access to information and prices, and the cutting out of intermediaries are likely to have a significant deflationary impact on mature markets and may well be one of the reasons for the current low interest rates in the Western World. This transformation may not be only due to Artificial Intelligence. But AI increasingly powers even simple applications such as using Google Maps to find your route from A to B.

Society will change, and policymakers must respond to these changes. The expected AI revolution may be as dramatic as the industrial revolution. Elon Musk has suggested that we consider a basic income provided by governments, since the number of people who cannot get traditional jobs will increase dramatically as automation rises over time.

Jobs that are most likely to become obsolete

A few studies have listed the likelihood of specific job categories being replaced by computers.

What can we learn from this? The more streamlined and process-oriented a job is, the more likely it will become redundant. Among the 5% of jobs most likely to be automated are telemarketers, insurance underwriters, watch repairers, data entry staff, umpires and referees, drivers, bookkeeping, accounting and payroll staff, paralegals, and legal assistants. On the other hand, recreational therapists were the least likely job profile to be replaced. However, experts assume that Artificial Intelligence will beat us in every task within 45 years.

Conclusions

Due to the exponential development of computer hardware, artificial intelligence solutions have led to significant breakthroughs in the last few years. Experts assume we are beginning a new industrial revolution where automation will make many jobs obsolete. Governments must prepare for a society where not everyone has a traditional job. While this development may look scary, it also means opportunities for a better life for all of us. There are threats, but if they can be managed, computers will help us all to live a better life even in the future.

Mikael Gislén is the Managing Director of Gislen Software, a Swedish-owned Indian Software development company. Mikael started the company in 1994. Gislen Software provides high-value software development services to Scandinavian, UK and US clients.

Do you need help with developing AI solutions?

If you need help using Artificial Intelligence in your solutions, don’t hesitate to contact us at Gislen Software. We have experts with the necessary skills and experience. Whether you face technical challenges or need strategic guidance, we are here to help you reach your goals. Contact us today to discuss how we can support your upgrade!

The article was originally published on June 5, updated and republished in December 2022, and updated again in February 2023 to cover the recent developments in Artificial Intelligence.